Huawei delays production of its next AI accelerator as Nvidia plans a way to enter China

Huawei is reportedly delaying the production of its Ascend 910C AI accelerator.

Because of U.S. sanctions against the company, Huawei has to be content with having its AI accelerators dominate in China rather than compete in America. The U.S. government may have handed this business to Huawei on a silver platter, since it would prefer that global leader Nvidia, whose GPUs are used as AI accelerators, not sell its new H20 chips in China. But Nvidia has come up with a way to take on Huawei in China.

This month, Nvidia will start production of the B40 AI accelerator. The B40 is made specifically for the Chinese market. Using Nvidia's latest Blackwell architecture, it is designed to perform at a level that will allow the component to be exported to China under U.S. export rules. With AI sizzling hot, high-profile customers in China have created a new rule for AI chip vendors that says, "Sell only what you already have in stock; if you fail to deliver, you’re in trouble."

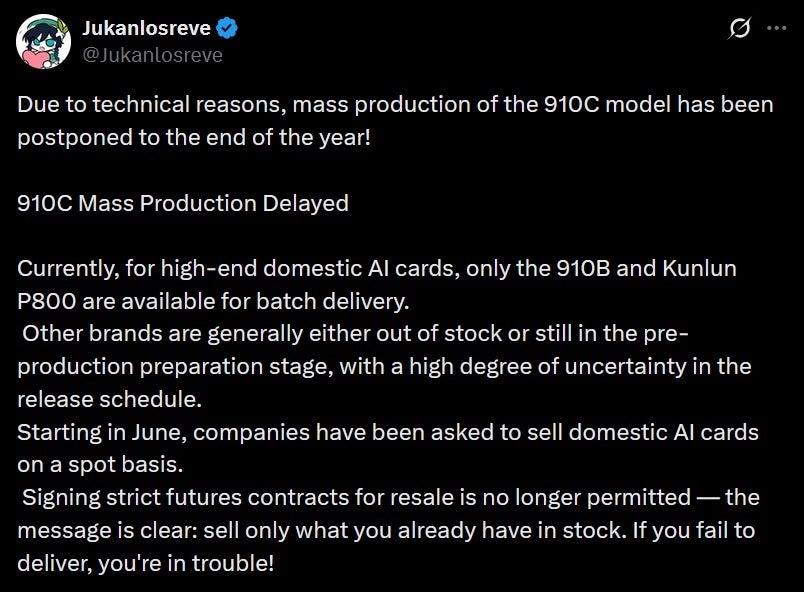

Huawei had planned to have its new AI accelerator, the Ascend 910C, built this month. However, a tweet from leaker @Jukanlosreve says that mass production of the chip has been pushed back to the end of the year for technical reasons. Huawei has had supply chain problems, and the tweet notes that Huawei's domestic rivals either have no inventory available to sell or are still in the middle of developing a competing chip.

When production does start at the end of this year, SMIC will build the Ascend 910C for Huawei on its second-generation 7nm process node, known as N+2. TSMC, which does not face the U.S. sanctions that SMIC does, builds Nvidia's H20 GPU on its 4nm 4N process node. Because the Ascend 910C will be made on the older, less advanced node, it could be slower and less energy efficient than Nvidia's H20.

Production of the Huawei Ascend 910C AI accelerator is delayed until the end of 2025. | Image credit: X

AI accelerators, and GPUs employed as such, use parallel processing, which performs the same calculation simultaneously on many different "pieces" of data. This ability explains why GPUs, like Nvidia's, are used as AI accelerators rather than CPUs. A CPU handles its workloads largely sequentially, which makes it less suited to AI use.
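For readers who want to see the difference, here is a minimal Python sketch of the idea, not of how any Huawei or Nvidia chip actually works. The function `scale_and_shift` and the sample data are made up for illustration. Python threads do not make this arithmetic faster; the point is only the shape of the work: one identical calculation, applied independently to each piece of data, so many workers can handle different pieces at once.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_shift(x: float) -> float:
    """The single calculation applied to every piece of data (hypothetical)."""
    return 2.0 * x + 1.0


def run_sequential(data):
    # Sequential style: process one piece of data after another.
    results = []
    for x in data:
        results.append(scale_and_shift(x))
    return results


def run_data_parallel(data, workers=4):
    # Parallel style: the same function is handed to several workers,
    # each working on a different piece of data.
    # pool.map returns results in the same order as the input.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(scale_and_shift, data))


data = [float(i) for i in range(8)]
sequential = run_sequential(data)
parallel = run_data_parallel(data)
```

Both approaches produce the same answers. Because no result depends on any other, the work can be spread across thousands of simple cores, which is the property that AI accelerators exploit.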
